Abstracting The Earth So That Cars Can Communicate

A shared understanding of the road

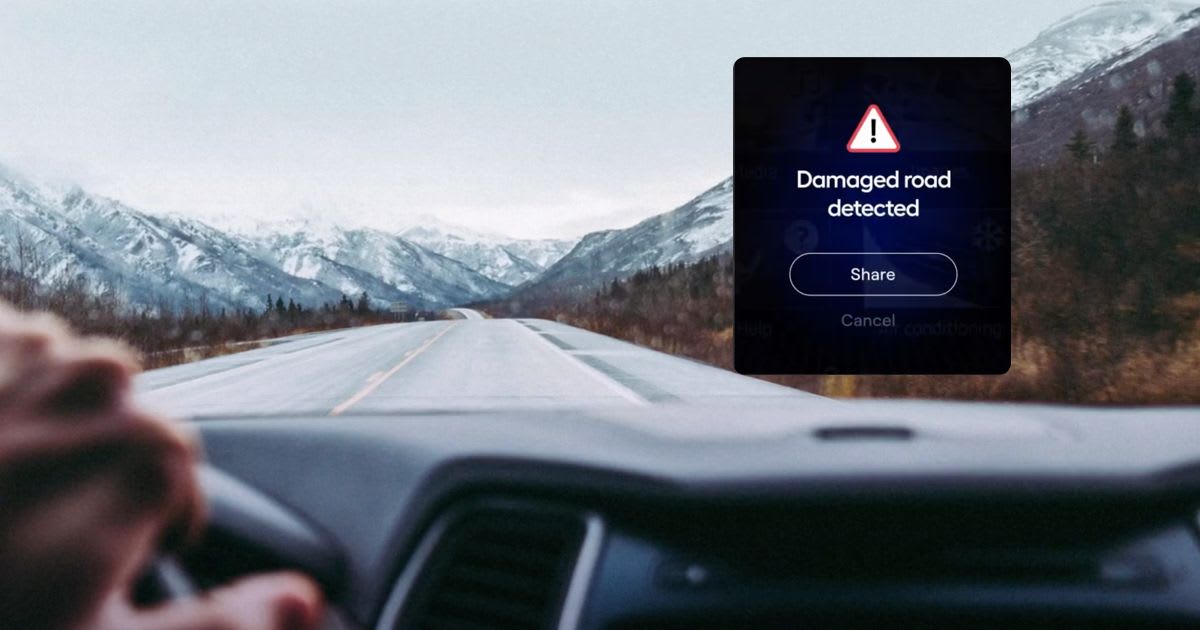

What if cars could “communicate” to create a common understanding of the road they are on?

Actually, they already do.

You’re driving: you see a brake light ahead. You brake instinctively, trying to avoid a rear-end collision. This is basic communication, and it’s very effective: the car you saw braking conveyed a message — something ahead required it to slow down or perhaps stop.

There is one important nuance to this type of communication: it is limited by line-of-sight. A brake light (or turn signal) is seen only by the cars that can see the signalling car. The message is a simple “me” message, as in “I am braking”; it says nothing about a broader issue on the road ahead. Yet if several cars were to send different “me” messages, and the context of those messages were somehow unified, a “we” consciousness could form about the state of the roads and the real-time safety of driving on them. Without a common context, all a driver would get is a plethora of confusing “me, me, me” messages from cars they cannot see, which would be difficult to make sense of. It would be like being bombarded with brake lights that you can’t place in your surroundings, so you can’t tell how relevant they are. Another way to think about this: if line-of-sight is vision, then hearing a honk (our auditory sense) tells us something about what is happening around us, even when we can’t see it.

Cars are getting smarter and will soon incorporate a growing number of cameras. They will use on-board sensors (including cameras) much the way people use vision to navigate. Even today, and especially with car cameras, these sensors create huge data streams that can complement single-car, line-of-sight vision. These data streams can extend the understanding of the road beyond line-of-sight and allow us to make better decisions, just as our sense of hearing adds to what we see on the road. Because cars are acquiring cameras, the idea of a shared “we” becomes interesting: vision data, if brought and analyzed outside the car (we’ve written about some of the challenges of doing this here), can be the basis for a collective consciousness of cars on the road, a swarm of crowdsourced vision.

So, How Can Cars Communicate? Some History

The potential of connected cars helping other cars has been understood for some time. Originally, when network messages were seen as a wireless extension of the brake light or accelerometer, the role of the network edge (which carries this communication) was defined according to that understanding: it would be low-bandwidth and would not require much computational power. At a minimum, cars would use the network edge to exchange authenticated direct communications (DSRC, with roadside units or RSUs). Here the edge is wireless communication in the 5.9 GHz band: not a cellular network, but rather 802.11p DSRC, an ad-hoc peer-to-peer network for lightweight V2V. Other approaches (C-V2X) focused on relaying communications among cars by using existing cellular roadside infrastructure. This approach creates direct car-to-nearby-car communication (or car-to-infrastructure communication, such as with traffic lights) on the edge. It is worth noting that significant federal funds were, and still are, invested in these types of projects, with the goal of radically increasing roadway safety and reducing fatalities.

These approaches, which were never deployed at large scale, worked only when data transmission demands were light. A virtual brake light could be transmitted, but not an image “seen” by another car, let alone an AI detection that “understands” what another vehicle saw. A braking event near a road work zone would be transmitted, but the actual image of that work zone, and the AI understanding that the zone is blocked, would not, since both require bandwidth and compute. Yet as technology progresses, cameras are becoming integral to most vehicles, with most models expected to have several integrated cameras within 3–4 years. What needs to be shared between cars, then, is the product of video feeds from many nearby cars in real time. There is no infrastructure to support this added safety, because cameras require a heavier connectivity (i.e. broadband) and compute “budget”. Delivering the safety inherent in car-camera “shared vision” requires bandwidth and compute at the edge, and it could bring better safety on a massive scale. This requires a new approach that goes beyond “me” messages and converts them into a coherent “we” story.

The Role of the Network Edge: Our Common Ground/Earth

If images and videos require processing, then in theory they would need to be processed in the cloud. Yet this approach isn’t feasible: no city can afford a direct upload of terabits of vision data from all vehicles straight to the cloud (read here for some context). Still, most approaches focus on either direct car-to-car communication or cloud-centric processing.

We need to find a common ground, somewhere between car-to-car messaging and cloud-based processing. In this middle ground, the network edge is used as an aggregation buffer between the cars and the cloud. Some of the work is done on the endpoints (cars), some on the edge (think of a 5G deployment) and some (as little as possible) in the cloud. Combining the two perspectives requires a shared reference, and that reference is none other than the earth itself. When the earth is abstracted as a standard part of the network edge, for example as IP-addressable hexagonal tiles, it doubles as a relay and a data-reduction buffer. It also dramatically reduces the data-plan costs associated with vision data, by performing selective, cherry-picked uploads of imagery.

This is because DSRC is peer-to-peer: if N vehicles, each with M peers, observe a hard brake, they send out N×M events and N×M images. With a hexagon broker in between, there are only N events, plus the 1 or 2 cherry-picked images that best represent the scene. That yields information that can actually be consumed; the pure P2P approach does not. Selective, scheduled vision-data transfer flattens communication peaks, avoids overwhelming cell towers on busy segments with redundant duplicates, and makes use of spare cell capacity.
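The event and image counts above can be made concrete with a small sketch. Everything in it is hypothetical: `BrakeEvent`, `HexTileBroker`, the string tile ID and the `image_quality` score are illustrative names, not part of any real network-hexagons interface, and ranking images by a single quality score is just one assumed way to “cherry-pick”. It compares what pure P2P fan-out sends with what a per-tile broker keeps and uploads.

```python
# Hypothetical sketch: pure P2P fan-out vs. a per-tile (hexagon) broker.
# Assumptions: in P2P each vehicle sends its event and its image to each of
# M peers; the broker keeps every event but fetches only the top-k images,
# ranked by a made-up "image_quality" score.
from dataclasses import dataclass


@dataclass
class BrakeEvent:
    vehicle_id: str
    tile_id: str          # hypothetical hexagon tile identifier
    image_quality: float  # hypothetical usefulness score for the captured frame


def p2p_transmissions(n_vehicles: int, m_peers: int) -> tuple[int, int]:
    """Return (events, images) sent when every vehicle broadcasts to M peers."""
    sent = n_vehicles * m_peers
    return sent, sent


class HexTileBroker:
    """Collects all events for one tile and cherry-picks the best images."""

    def __init__(self, tile_id: str, max_images: int = 2) -> None:
        self.tile_id = tile_id
        self.max_images = max_images
        self.events: list[BrakeEvent] = []

    def report(self, event: BrakeEvent) -> None:
        # One event per observing vehicle: N events in total, not N*M.
        if event.tile_id != self.tile_id:
            raise ValueError(f"event for {event.tile_id} sent to {self.tile_id}")
        self.events.append(event)

    def images_to_fetch(self) -> list[str]:
        """Vehicle IDs whose images should be uploaded, best first."""
        ranked = sorted(self.events, key=lambda e: e.image_quality, reverse=True)
        return [e.vehicle_id for e in ranked[: self.max_images]]


# Usage: five vehicles (N = 5) observe the same hard brake; each has four peers (M = 4).
broker = HexTileBroker("hex-42")
for i, quality in enumerate([0.4, 0.9, 0.7, 0.2, 0.8]):
    broker.report(BrakeEvent(f"car-{i}", "hex-42", quality))

print(p2p_transmissions(5, 4))   # (20, 20): 20 events and 20 images over P2P
print(len(broker.events))        # 5 events reach the broker
print(broker.images_to_fetch())  # ['car-1', 'car-4']: only 2 images uploaded
```

Keeping every event while fetching only a couple of images preserves the signal (how many cars braked), while the expensive imagery payload scales with the chosen k rather than with N×M.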

The underlying assumption is that cars have varying degrees of compute and storage capability.

- Car-cams capture a tremendous amount of data while driving and can process and store this data — to an extent.

- Some of the processing has to happen in the context of other data that is not present in the car. Detections by other cars at the same location (of a fallen tree, an accident, dangerous roadside conditions) need to be localized, correlated and corroborated.

- Outside the car, there should be a per-tile registry of who needs to be informed about new detections: other cars, a mapping application, a parking application, a smart city, etc. That’s why the abstraction of the earth is integral to auto-edge. Every tile of the road has an addressable twin object at the edge that stores the content uploaded from that road location, source-routed to its edge twin. The twin functions as a Twitter-like feed that delivers curated data to the relevant followers right now; think of the followers of the El Camino San Antonio junction or the 555 5th Avenue segment.

- Perhaps the most powerful feature of the edge-earth abstraction is the optimal use of the network itself. The edge keeps track of which car saw which tile of road and curb, and when. Acting as a ledger, the edge can selectively fetch vision data based on merit and actual need. This cherry-picking flattens fleets’ data plans and optimizes the use of car compute and storage. Content can periodically be purged from the car to make room for more rides without losing value.
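The three edge roles in the list above (a per-tile follower registry, corroboration across cars, and a ledger for selective fetching) can be sketched as one small class. This is a minimal illustration under stated assumptions, not the auto-edge implementation: `TileTwin`, the tile ID string, the two-reporter corroboration threshold and the “most recent pass” fetch rule are all hypothetical.

```python
# Hypothetical sketch of a per-tile edge twin: followers, corroboration, ledger.
# Assumptions: a detection is corroborated once `min_reporters` distinct vehicles
# report it; the fetch rule simply picks the most recent pass over the tile.
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Optional

Notify = Callable[[str, str], None]  # callback(tile_id, detection)


@dataclass
class TileTwin:
    tile_id: str
    min_reporters: int = 2
    followers: dict[str, Notify] = field(default_factory=dict)
    reporters: defaultdict = field(default_factory=lambda: defaultdict(set))
    published: set[str] = field(default_factory=set)
    ledger: list[tuple[float, str]] = field(default_factory=list)  # (time, vehicle)

    def follow(self, name: str, callback: Notify) -> None:
        """Register a follower: another car, a mapping app, a smart city, ..."""
        self.followers[name] = callback

    def record_pass(self, vehicle_id: str, timestamp: float) -> None:
        """Ledger entry: this vehicle holds footage of this tile from this time."""
        self.ledger.append((timestamp, vehicle_id))

    def report(self, vehicle_id: str, detection: str, timestamp: float) -> None:
        """Record a detection; notify followers once it is corroborated."""
        self.record_pass(vehicle_id, timestamp)
        self.reporters[detection].add(vehicle_id)  # distinct vehicles only
        corroborated = len(self.reporters[detection]) >= self.min_reporters
        if corroborated and detection not in self.published:
            self.published.add(detection)
            for notify in self.followers.values():
                notify(self.tile_id, detection)

    def who_to_fetch_from(self, since: float) -> Optional[str]:
        """Vehicle with the most recent pass at or after `since`, or None."""
        recent = [entry for entry in self.ledger if entry[0] >= since]
        return max(recent)[1] if recent else None


# Usage: a mapping app follows a (hypothetical) junction tile.
inbox: list[tuple[str, str]] = []
twin = TileTwin("el-camino-san-antonio")
twin.follow("mapping-app", lambda tile, det: inbox.append((tile, det)))
twin.report("car-1", "fallen_tree", 100.0)
twin.report("car-1", "fallen_tree", 101.0)  # same car again: not corroboration
twin.report("car-2", "fallen_tree", 105.0)  # second distinct car: published
twin.record_pass("car-3", 110.0)            # passed later, no detection reported

print(inbox)                          # [('el-camino-san-antonio', 'fallen_tree')]
print(twin.who_to_fetch_from(104.0))  # car-3
```

Publishing only after corroboration keeps a single noisy detection from reaching followers, and the ledger lets the edge ask one car for imagery instead of collecting it from every car that passed the tile.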

(If you’re interested, you can read more about the relative costs of endpoint, edge and cloud compute and bandwidth here.)

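This standard auto-edge abstraction is called network-hexagons. Hexagons are nature’s own tiling method for surfaces with clearly defined neighbors: in a regular hexagonal tiling, every tile shares an edge with exactly six neighbors, all equally distant from its center, and those neighbors are the other tiles impacted by detections on a given tile. The interface for mobile edge source-routing is available at: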