It’s All Corner Cases: Teaching Computers to Drive Safely

Also introducing our latest data challenge for autonomous developers

It could be argued there is only one proven Big Data application: web search. Nothing so far has met the sheer size and complexity of indexing the web at the precision, recall, and freshness Google delivers. In its quest to structure the web well beyond text documents, around 2011 Google realized it had to fundamentally change the way it was indexing images. Google’s DistBelief system, the inception of the newly formed Google Brain team, pushed the boundaries of how deep learning could be applied to massive problems by training on a highly distributed configuration of thousands of CPUs. The publication of this system marked a key milestone for Google and the tech industry at large. By applying the deep learning techniques Geoff Hinton and Yann LeCun had been researching for over a decade, Google was finally able to create a production system that could scale to understand and structure information from images.

As much as we talk about the advances of machine learning, AI and deep learning, nothing so far has rivaled what Google achieved. During my tenure as a Sr. Fellow at Yahoo, I long argued the only company that had truly solved a deep learning problem (and by its nature, a BIG data problem) was Google (also, maybe Facebook?). All other companies were practitioners reusing either infrastructure, model architectures, third-party training data, or even pre-trained models. Only Google has created and given access to all the necessary components to move the state of the art forward in the industry. We see deep learning being touted left and right, and the number of startups now heading toward that frontier is astonishing. The automotive space is no different. Many, many companies are trying to replace the human at the wheel and competing in a shift that could represent a return of trillions of dollars.

But how did Google manage this? And what do we, in the automotive field, have to learn from it?

While the Google way is probably the only proven success in building true big data deep learning applications (emphasis here being on truly BIG), most companies are not trying to replicate “the Google way” or to think at scale. Rather, they are doing it the wrong way, the unproven way, what I refer to as the “small way”. There are two things that propelled Google engineers forward at a speed nobody else could achieve: training with terascale datasets and continuous experimentation. I would argue that today most AI companies suffer from one or both of two symptoms: (1) building models using expensive, small, manually annotated datasets and (2) taking months, or even years, to go from model development to production. This is the antithesis of search, i.e. the proven way.

Be Obsessive About Data Collection

Google’s engineers are obsessed with indexing more data than anybody else. Google believes every single web page should be indexed. Period. It’s an obsession, not an approximation. By being relentlessly focused on every last web page, the rarest n-grams, the longest query and the least frequent dictionary term (aka the tail), Google exponentially distanced itself from its competitors. As time went on, it became harder — and eventually impossible — for Yahoo and Bing to catch up. Google had established its moat. The moat attracted advertisers, advertisers brought money, and money allowed Google to go much further down the tail.

Google learned it could not make sense of the web with traditional natural language processing (NLP). It was just way too hard at this scale. To be able to index such a beast, you would need new models, infrastructure and ways to annotate the data. Figuring out how to annotate all data, billions of web pages initially, and later trillions of clicks and pageviews, constituted Google’s biggest secret.

Have Your Users Tag All Data

Google did not hire editors, annotators or labelers to make sense of web pages. Google leveraged its users to label the data — all data. Google figured that in order to make real advances in (deep-learning) prediction models it needed all data to be annotated — and only end-users could do that effectively. Google changed what they understood as success, moving away from NLP to actual end-user engagement. It opened the door to fully leveraging the users’ implicit actions to label the web index.

Repeatedly, Google has figured out how to formulate problems so it can leverage users to label data. The now famous PageRank algorithm was an example of this, leveraging the flow of inbound and outbound links between web pages to score relevance, beyond what had been done traditionally using just term frequency. Beyond PageRank, clicks represented the next best proxy for engagement; together with dwell time, bounce rates, etc., they were all used to “describe” web pages. YouTube videos are indexed using the description and comments. Images are indexed using links, comments, titles, EXIF data. Faces are indexed by users, driven by a combination of utilitarian need and vanity.

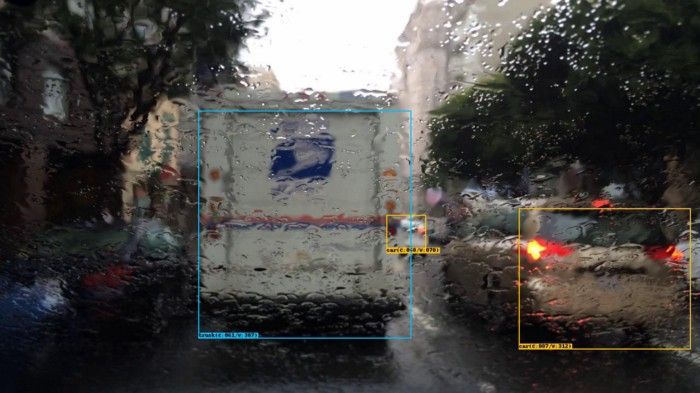

In comparison, if we look at AI startups, few are taking these steps. First, we should always collect as much data as possible, likely more than we would otherwise be comfortable with. Second, we must devise methods for users to implicitly annotate everything. For example, when the user presses the brakes hard, they are labeling a video sequence as dangerous. Or when we show a warning and the user does not react, the user is implicitly telling us it is a false positive (or that they are potentially distracted). Third, this is not a one-time battle: we must keep going down the long tail of unique driving situations. How useful is an ADAS system if it doesn’t operate at night and detect cyclists for a taxi driver who only drives nights in Manhattan, when the cycling couriers are most dangerous? One of the interesting consequences is that the entropy or information gain of these systems is not finite, but rather infinite. As we look at autonomous driving, many companies are: (1) not able to collect data, (2) not able to tag data at scale or (3) not able to address edge and corner cases.
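
To make the implicit-annotation idea concrete, here is a minimal sketch of how the two examples above (a hard brake, an ignored warning) could be turned into labels. It is an illustration only, not a description of any production system: the `Clip` fields, the label names and both thresholds are assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds, chosen only for illustration.
HARD_BRAKE_MS2 = -4.0     # deceleration (m/s^2) treated as a hard brake
REACTION_WINDOW_S = 2.0   # seconds the driver has to react to a warning


@dataclass
class Clip:
    """Signals recorded alongside one short video sequence (hypothetical schema)."""
    min_accel_ms2: float               # most negative longitudinal acceleration
    warning_shown: bool                # did the app raise a warning?
    reaction_delay_s: Optional[float]  # warning-to-reaction time, None if no reaction


def implicit_label(clip: Clip) -> str:
    """Derive a weak label from what the driver did, with no human annotator."""
    # A hard brake marks the video sequence as dangerous.
    if clip.min_accel_ms2 <= HARD_BRAKE_MS2:
        return "dangerous"
    # A warning the driver ignored is a likely false positive
    # (or a sign that the driver was distracted).
    if clip.warning_shown:
        no_reaction = (
            clip.reaction_delay_s is None
            or clip.reaction_delay_s > REACTION_WINDOW_S
        )
        if no_reaction:
            return "false_positive_or_distracted"
    return "unlabeled"


clips = [
    Clip(min_accel_ms2=-6.5, warning_shown=False, reaction_delay_s=None),
    Clip(min_accel_ms2=-0.5, warning_shown=True, reaction_delay_s=None),
    Clip(min_accel_ms2=-0.8, warning_shown=False, reaction_delay_s=None),
]
labels = [implicit_label(c) for c in clips]
```

Labels produced this way are noisy, so a sketch like this only pays off when applied across a very large number of drives, which is the point of the argument above.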

Test in the Field, Not in the Lab

Google learned along the way that testing models can’t happen in the lab. Flickr had introduced a similar concept for engineering earlier on. Flickr engineers were doing over 50 code releases into their production website — just the website. Engineers were not spending a lot of time testing on dev environments, but rather bugs were introduced and fixed directly in production. The secret was doing this fast, very fast. That whole practice merged later with continuous integration and some termed this process “continuous development”. At the end of the day, Flickr engineers learned that the complexity of these systems only increases with time and either you accept this or you fight it. If you fight it, you’re in for an uphill battle. Maybe unit tests give you a warm cozy feeling, but they don’t solve the problem. Instead of fighting the problem, Flickr engineers accepted it and pushed it to production. By leveraging users in their testing, Flickr was able to move fast.

Google had already developed a culture of leveraging its users to understand the data, so moving from there to leveraging users to test its models made sense. Google started breaking apart the web search experience into buckets. Users were assigned to a bucket, and the number of buckets running in parallel at any point in time was only limited by the minimum statistical significance required. A bucket encapsulated a full experiment (at a later point in time, Google would also introduce multivariate testing), from data (yes, more data, different data, data augmentation) to models to business logic or UI changes. At any point in time there would be hundreds of buckets in production, each testing a small variation. Why do this with users instead of the lab? In the lab, we could look at log-loss, precision, recall, F-score and all sorts of fancy statistical tests. But would a good fit in the lab mean a good fit in production? Or vice versa, and more importantly, does a bad fit in the lab necessarily perform poorly in the real world? The answer is that any bucket can perform well and any bucket can perform badly. Lab data is biased by definition and only represents a small subset of the population, even with random sampling applied.
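
As a rough illustration of bucketing, the sketch below assigns users to experiment buckets by hashing their ID, and caps the number of parallel buckets by a minimum sample size per bucket. This is a common general technique, not a description of Google's actual system; the function names, the salt and the numbers are assumptions for the example.

```python
import hashlib


def assign_bucket(user_id: str, num_buckets: int, salt: str = "exp-2024") -> int:
    """Deterministically map a user to one of num_buckets experiment buckets.

    Hashing (rather than random draws) keeps a user in the same bucket
    across sessions without storing any assignment table.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets


def max_parallel_buckets(active_users: int, min_users_per_bucket: int) -> int:
    """Upper bound on simultaneous buckets given a minimum sample size each.

    min_users_per_bucket stands in for whatever sample size the required
    statistical significance demands (an assumed input here).
    """
    return active_users // min_users_per_bucket


# Hypothetical numbers: 1,000,000 active users, 5,000 users needed per bucket.
n_buckets = max_parallel_buckets(1_000_000, 5_000)
bucket = assign_bucket("driver-42", n_buckets)
```

Changing the salt reshuffles users into new buckets, which is one simple way to start a fresh round of experiments without carrying over earlier assignments.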

In driving, how many annotations do we need to build a double-parking detector? How do we find these frames to begin with? Assuming one can find one double-parked vehicle in every 30 minutes of driving, and we are exposed to the vehicle for at most 10 seconds, that’s a 1:180 exposure. We need 180,000 frames annotated to produce 1,000 frames with double parking to even start training a classifier to recognize double parking. Now, what if this is by night? And it’s snowing? And we are not in Manhattan but in Moscow? How many manually annotated samples do we need to build a robust double-parking detector? And if we decide to make it only work for the top 20% of the cases, in daylight in Manhattan, aren’t we doing exactly what Yahoo and AOL did in display advertising, targeting the head and ignoring the tail? Rather, if we observe (through our users in the field) a slowdown, a vehicle blocking the lane, a change of lane, an increase in speed, as a sequence of events, and we do this over many data collection points, we will own a training set for double parking that is significantly more robust, and exponentially scalable.
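
The exposure arithmetic can be checked with a few lines of code. This sketch assumes, as the paragraph does, one sighting per 30 minutes of driving, at most 10 seconds of visibility per sighting, and frames sampled uniformly over driving time; the function names are illustrative.

```python
import math
from fractions import Fraction


def exposure_ratio(seconds_visible: int, minutes_between_sightings: int) -> Fraction:
    """Fraction of driving time during which the event is on camera."""
    return Fraction(seconds_visible, minutes_between_sightings * 60)


def positives_from(annotated_frames: int, ratio: Fraction) -> int:
    """Expected number of positive frames among uniformly sampled frames."""
    return math.floor(annotated_frames * ratio)


def frames_needed(target_positives: int, ratio: Fraction) -> int:
    """Frames that must be annotated to expect target_positives positives."""
    return math.ceil(target_positives / ratio)


ratio = exposure_ratio(seconds_visible=10, minutes_between_sightings=30)
print(ratio)                           # 1/180
print(positives_from(180_000, ratio))  # 1000
print(frames_needed(1_800, ratio))     # 324000
```

At a 1:180 exposure, every positive frame costs 180 annotated frames, and that is before splitting the data further by night, weather or city.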

What We’re Learning at Nexar About Data Collection and Use

Collecting driving data is expensive. Cars are not hooked up to a data center and we need to be selective about what gets transferred over cellular. Some try to compensate for the lack of driving data with simulation. However, simulation is not the answer, as it doesn’t allow you to solve problems you don’t know exist (remember the truism: you don’t know what you don’t know). Many experts argue that Automation Level 3 suffers from an expensive hand-off from machine to human, which will probably render it unviable. We are likely to see only Levels 1 and 2 from simulations, and at much later stages, 4 and 5 from real-world, unexpected driving scenarios.

When users collect and annotate all data, we will become 1000x better. In autonomous driving, it’s challenging but critical to tag all the data, more so than in search. As a side note, this is a key reason why I think LiDAR will continue to lag for a long time. There is too much complexity in making sense of LiDAR data in an automated way. Video, in comparison, is a cheap but extremely rich medium (the density of information is very high). And when the camera does not work (namely at night), neither does LiDAR (we can’t interpret point clouds for which there are no semantics and people can’t label what they don’t see) and the only true fallback is radar (or V2V). So, we need to stop solving problems that don’t scale on the tagging side. If we can’t automatically tag all the data, not just a ground truth dataset, we’re likely looking at this problem in the wrong way. What good is a driving algorithm if it only works in San Francisco and not in New York? How does an ADAS system help a taxi driver in Manhattan if it can’t detect a courier cyclist? Or a trucker in Wisconsin who is not warned about a crossing deer? Do we wonder why only 1% of vehicles have ADAS? Price, complexity, availability, etc. are factors, and another is a lack of product-market fit. Each of these problems needs solving. But if each requires manually labelled data in a lengthy and costly process, we are facing a losing proposition. We need to solve each of these problems, but in a more holistic way that does not require manual tagging. By approaching the problem with holistic learning models, we can devise a solution limited only by the amount of data we capture.

Which is exactly why at Nexar, we want to capture all the data, and more. When a user deletes a ride, it’s likely that ride was not interesting. Can we learn how to find non-interesting rides? When a user keeps a constant distance to the car in front and has no warnings, we learn what a safe distance is. When a fleet manager watches a video of one of their drivers, we know which videos are more important. When users approach a curve at a given point of entry, heading, speed and acceleration, they are telling us which is the optimal path. These are all just examples of the many things we can and should capture in order to train models that do not rely on manual human annotation. Think Google.

We’ve learned we need to iterate constantly and continuously. Google runs hundreds of R&D cycles per week. Automotive companies run one R&D cycle every 3 to 5 years. Meanwhile, users are continuously collecting data, implicitly tagging data, and testing models as they drive. Even a relatively fast and innovative company like Tesla took over a year to re-introduce Autopilot after the crash in 2016.

As we go forward, not just as Nexar but as an industry, we need to relentlessly observe, think, challenge, and solve how we do things every day, so we continuously move faster in the direction of solving a life-saving big data problem through leveraging all of us as drivers. Let’s (re)visit and (re)formulate what we do, and see if we are solving the end-user problems, or rather only intermediate steps.

Overall, when we look at the future we see a huge opportunity ahead to use software to learn from the 10 trillion miles humans drive every year and build solutions drastically better than what exists today. At Nexar, we have already proven many things along the way that demonstrate this approach is indeed the right way to build a big data deep learning application. We run non-trivial deep learning models on devices at the edge. We move millions of miles of driving knowledge per week to the cloud. We add new perception-to-warning models for driving in a week. We have market segments for which our application already provides tremendous value.

To get a taste of our approach and help us make the most of all the data we’re collecting, join us on our journey to eliminate car collisions. Join our open driving perception challenge here.