Imagining vision-based connected car camera applications

By 2024 most new car models will include several built-in cameras. Cameras are there for the cars to “see” — the road, cyclists, pedestrians, road signs and more. Most industry pundits focus on the use of car-sight as cars evolve to self-driving. Yet, even before, during and after self-driving becomes a reality, novel types of applications will be built on top of cameras. The most interesting ones will come from new uses for car-vision — and from enabling vision that goes beyond line-of-sight, i.e. vision that “sees” what the car (and the human inside it) cannot see.

If cameras (and the AI that powers them) go beyond driving-assist functions, what will they do? We are quickly moving toward a state where we’ll have millions of eyes on the road. They could be leveraged to create crowd-sourced vision (a “shared visual memory” of the road) and to enhance the driving experience with vision-based applications, showing us the state of roads near and far, in real time and near real time.

This blog post will tackle the question of what types of applications we should expect when car cameras become the norm. Some applications won’t require a network of seeing cars, and some will. As you can probably guess, the really interesting applications require a network, because the network enables you to see what’s beyond your line of sight, by tapping into a larger visual memory of the road, powered by millions of eyes.

App family one: line-of-sight apps

First, let’s look at how camera-based vision can bring value to drivers — even when there is no camera network and apps are limited to line-of-sight only.

The car-selfie

Twenty years ago, no one imagined we’d be taking and sharing so many pictures of ourselves, our families, food, pets, household plants, the trips we take and more. Yet we do. The same instinct applies to driving (though hopefully people aren’t driving and taking pics at the same time): people want to share interesting driving experiences with others. Our experience with Nexar’s market-leading dash cams bears this out. We see a lot of driver activity around creating, saving and sharing clips of driving: animals crossing, spectacular sunsets, crazy weather. You can see some of them here.

This makes the first application for car cameras pretty self-evident: taking videos or photos of the road and sharing them, using a voice-activated interface that prevents the risky behaviour of actually trying to film something while driving. We’ve seen great interest in this feature, both from car OS players and from our existing dash cam user base.

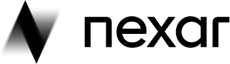

Sharing videos from the road is an example of an app that doesn’t require a network. Some camera-based apps can work with even just one user in the world, while others require a network effect, that is, partaking in a network of car cameras that share vision data. Sharing videos from the road, insurance applications and an app that records what happens if the car is hit or broken into (“sentry”, which also streams the video to the user’s phone) don’t require a network of other drivers. These applications can therefore be the first set of vision-based apps and connected services for cars: a simple win for automakers.

Parking, security and groups

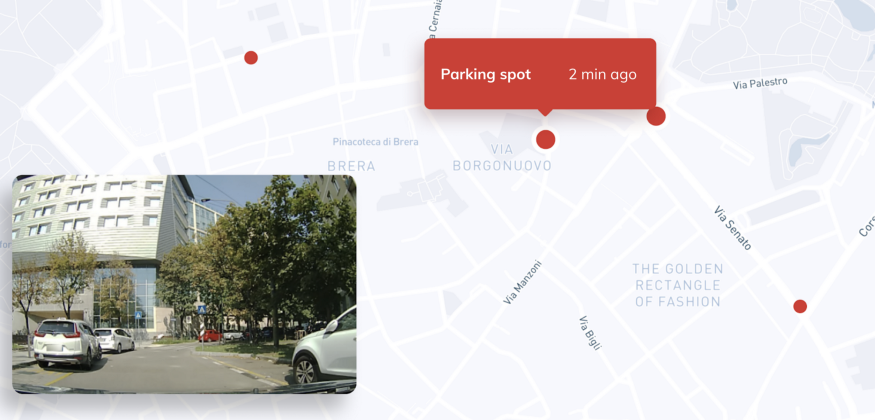

Another app that becomes valuable with vision is parking. For instance, it can answer the “where did I park” question by serving the latest video of the car being parked, in case you forgot.

Another app in this family can show events that happened while the car was parked and you were away, in case it was hit or tampered with. Our market data and user base indicate that the ability to remotely see what is going on with the car (we call it sentry mode) has enormous potential, marrying security needs with dash cam functions. Since cars come with cameras both inside and outside, vision AI can also provide additional detail about what is happening to the car, beyond the basic input that comes from a motion sensor.

Although sentry can work with just one camera, in the future, video from other cars in the area can and will provide additional insight into what is happening, possibly capturing the same incident from various angles and giving cities a better understanding of what is going on in the streets.

Group features are about sending messages to notify emergency contacts and sharing videos from the road with them. These features are mostly used between spouses or when a teenager is driving, and can also be used by fleets.

Insurance

Insurance, or the record of what actually happened in case of a collision, is what made people use dash cams in the first place. Dash cams give drivers a sense of peace and security: they have the record on their side.

However, as dash cams evolve, so does the use of insurance applications. Beyond the recording of an insurance event, the basic dash cam insurance feature is a one-click report that packages the relevant video and sensor data for submission to an insurer. This is just the beginning, though. Cameras have the potential to revolutionize car insurance by eliminating fraud, automating claims processing and liability determination, and giving drivers quick and effective assistance once they’ve been in an accident. Additionally, cameras can generate insights into people’s driving behaviours and enable novel ways of scoring drivers.

With vision AI, the insurer can automatically receive collision videos (together with GPS and other sensor data), ingest the data and know which drivers are in need of help. The same technology can be used to automatically “understand” collisions and then process claims faster, even giving insight as to the parties’ relative fault, through scene reconstruction that covers both the driver and the 3rd party’s actions.

App family two: non-line of sight apps

Non-line-of-sight apps are where unlocking the potential of millions of eyes on the road becomes truly compelling. By using vision, we can create a variety of insights about the road, insights that can’t be seen by the driver or the car, but can be extremely valuable in making the road safer and driving stress-free. Here are some of the apps that will come into play:

Navigation

Navigation can teach us a powerful lesson about what makes users share information into a crowd-sourced network. In short, users will happily share data if they can also benefit from the data shared by others.

But first, let’s begin with some history. In the beginning, we had various GPS-based navigation apps, each with its own map. The maps didn’t change much. Any real-time information (a traffic jam that was building up, a car stuck on the roadside, extreme weather) was missing from the information presented to drivers. The spectacular rise of Waze, which invented crowd-sourced navigation, changed the map (pun intended) completely. Using GPS data and user input, the map began updating itself, automatically noticing traffic, road blockages and more. What’s more, it was able to provide accurate estimated arrival times, based on what was going on on the road, and suggest alternate routes based on this information. The crowd-sourcing of road data proved a compelling differentiator and created a killer app. Users were happy to share their data, since it was self-evident that the value they derived from the app was a result of other users sharing their data as well. That was a powerful incentive to share data.

When car cameras become common (and they will), they can drive the next phase of crowd-sourced data about the road.

Think of vision-based inputs to the crowd-sourced navigation system. Instead of just sensing that a car is slowing down and then surmising that the reason is traffic, as is done today with telemetry-based inputs, a vision-based approach can literally take a look, take in input that’s way more complex and multi-layered than telemetry data, and actually understand why traffic has slowed down. This means that drivers contributing to a navigation app will provide deeper information, way beyond “traffic has slowed down” or manual inputs such as a driver indicating “car by the side of the road” or “police ahead”. Drivers consuming that data will also be able to visually verify the cause of traffic or any other road condition, which should provide them with additional value beyond just getting there on time.

Vision AI can detect many things. Work zones, for instance, are detected by looking at the colors, signs, cones and barriers erected to safeguard construction. Such a detection can be fed into the navigation app, so it can “see for itself” and inform others. Even today such detections are important, both for cities and transportation authorities seeking to monitor road encroachment, and for autonomous vehicles, which try to avoid driving by construction zones as much as possible. Other possible detections include free parking spots, a queue forming at your favorite cafe, or the cause of a traffic slowdown.

Free parking spots are a great example of the superiority of vision data over telemetry data. With telemetry, industry pundits estimate that each car can probably detect 3–4 parking spots a day, with the additional difficulty of inferring whether those spots are indeed legitimate. This means that to run a network detecting free parking spots you would need many cars, and you would probably generate many false positives. With vision, the uber-sensor, a car can detect 30–100 free parking spots in one hour, and AI can be used to check that the detected spots are legitimate. The network required is smaller too, since there are more detections per car.
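To make the "smaller network" point concrete, here is a rough back-of-the-envelope sketch in Python. The per-car detection rates are the figures quoted above; the hours driven per day and the daily detection target are hypothetical values picked only for illustration, and the calculation ignores false positives and several cars reporting the same spot.

```python
import math

# Figures quoted in the text above (low, high).
TELEMETRY_SPOTS_PER_CAR_PER_DAY = (3, 4)
VISION_SPOTS_PER_CAR_PER_HOUR = (30, 100)

# Hypothetical values, chosen only for illustration.
HOURS_DRIVEN_PER_DAY = 1.0
TARGET_DETECTIONS_PER_DAY = 1200


def cars_needed(target_per_day: float, per_car_per_day: float) -> int:
    """Smallest number of cars whose combined daily detections meet the target."""
    return math.ceil(target_per_day / per_car_per_day)


def fleet_range(target_per_day: float, per_car_low: float, per_car_high: float):
    """Return (best case, worst case) fleet sizes for a range of per-car rates."""
    return (
        cars_needed(target_per_day, per_car_high),
        cars_needed(target_per_day, per_car_low),
    )


# Convert the hourly vision rate to a daily rate using the assumed driving time.
vision_per_day = tuple(r * HOURS_DRIVEN_PER_DAY for r in VISION_SPOTS_PER_CAR_PER_HOUR)

telemetry_fleet = fleet_range(TARGET_DETECTIONS_PER_DAY, *TELEMETRY_SPOTS_PER_CAR_PER_DAY)
vision_fleet = fleet_range(TARGET_DETECTIONS_PER_DAY, *vision_per_day)

print("telemetry cars needed (best, worst):", telemetry_fleet)
print("vision cars needed (best, worst):", vision_fleet)
```

Under these assumptions the telemetry-only network needs roughly ten to thirty times more cars than the vision network to reach the same number of raw detections. The exact ratio depends entirely on the assumed driving time, so treat it as an order-of-magnitude illustration.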

Safety

The next step in thinking about crowd-sourced vision is safety. Drivers (and cameras) will see what’s in their line of sight, as they have always done. But the same timestamped, crowd-sourced memory that tells them about a parking spot detected 2 minutes ago, beyond their line of sight, can also alert them to safety issues, maybe ones that occurred seconds ago.

The idea that cars can communicate their status and provide a safer driving experience has been around for a long time, initially supported by DSRC (Dedicated Short-Range Communications), and today through the vision of many connected car initiatives. The idea is simple yet effective: if cars could tell each other what is going on (I just braked, there is a pedestrian crossing), safety would improve. The second recipient of such messages is infrastructure: cars could communicate with traffic lights and more, so that traffic is better managed. However, these non-line-of-sight messages from neighboring cars can sound like a constant stream of “Me! Me! Me!” messages, with little context. This can become a nuisance. Imagine driving in a busy urban area and getting many messages about “sudden braking ahead”. Too many messages will make you ignore them.

What’s missing here is a common “memory” that moderates the messages, locates them and provides the context needed to make sense of them. Unlike past approaches to vehicle-to-vehicle communication, where messages didn’t require much bandwidth, adding vision data requires bandwidth and compute, with processing happening on the edge for cost and latency reasons. That’s one of the reasons behind our Nexagon standard: we came to the understanding that the future of vehicle-to-vehicle communication is a vision-powered shared memory, as the secure, scalable approach to sharing information in real time.
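As a toy illustration of what "moderating and locating" messages could mean, the Python sketch below merges repeated reports of the same kind in the same place into a single timestamped entry, and only returns entries that are still fresh and near the driver. Everything in it is an assumption made for illustration: the square grid, the cell size, the event names and the freshness windows are hypothetical, and it does not represent the Nexagon standard or any real vehicle-to-vehicle protocol.

```python
import math
from dataclasses import dataclass

CELL_DEG = 0.001  # hypothetical grid cell size in degrees (about 100 m of latitude)

# Hypothetical freshness windows: safety events expire in seconds, parking in minutes.
MAX_AGE_S = {"sudden_braking": 10.0, "free_parking": 120.0}


@dataclass
class Entry:
    kind: str
    cell: tuple[int, int]
    first_seen: float
    last_seen: float
    reports: int = 1


def cell_of(lat: float, lon: float) -> tuple[int, int]:
    """Map a position to a grid cell so that nearby reports share a key."""
    return (math.floor(lat / CELL_DEG), math.floor(lon / CELL_DEG))


class SharedMemory:
    def __init__(self) -> None:
        self._entries: dict[tuple[str, tuple[int, int]], Entry] = {}

    def report(self, kind: str, lat: float, lon: float, ts: float) -> Entry:
        """Merge a report into the entry for its (kind, cell) instead of re-broadcasting it."""
        key = (kind, cell_of(lat, lon))
        entry = self._entries.get(key)
        if entry is None:
            entry = Entry(kind, key[1], ts, ts)
            self._entries[key] = entry
        else:
            entry.last_seen = max(entry.last_seen, ts)
            entry.reports += 1
        return entry

    def nearby(self, lat: float, lon: float, now: float, radius_cells: int = 1) -> list[Entry]:
        """Return fresh entries within radius_cells of the given position."""
        cx, cy = cell_of(lat, lon)
        return [
            e for e in self._entries.values()
            if abs(e.cell[0] - cx) <= radius_cells
            and abs(e.cell[1] - cy) <= radius_cells
            and now - e.last_seen <= MAX_AGE_S[e.kind]
        ]


memory = SharedMemory()
# Three cars report the same braking event within two seconds of each other.
memory.report("sudden_braking", 40.7128, -74.0065, ts=100.0)
memory.report("sudden_braking", 40.71283, -74.00652, ts=101.0)
memory.report("sudden_braking", 40.71281, -74.00651, ts=102.0)
# One car saw a free parking spot a block away, earlier on.
memory.report("free_parking", 40.7135, -74.0065, ts=0.0)

for entry in memory.nearby(40.7128, -74.0065, now=105.0):
    print(entry.kind, "reports:", entry.reports, "age:", 105.0 - entry.last_seen)
```

In this sketch a driver querying the memory sees one braking entry backed by three reports rather than three separate alerts, and each entry carries its age, which is the kind of context the raw message stream lacks.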

Conclusion

Cameras on cars are about to create a new breed of applications, driven by information that’s beyond line of sight, adding a new sense to drivers, cities and autonomous vehicles and making them safer and better. This will happen through the creation of a common, shared memory that’s local and that can give us much better information about the road and its surroundings. Some of these applications can be imagined today; others can’t, and we will need to wait and see what they are.